Jailbreaking ChatGPT is easier than jailbreaking an iPhone, provided you enter the right prompts.

To protect users, OpenAI builds guardrails into its generative AI models that limit what they can do.

ChatGPT, GPT-4, and similar AI tools come with guardrails so that they won't answer certain questions, especially queries that call for harmful or controversial answers.

However, jailbreaking ChatGPT can be a piece of cake for enthusiasts, so these limitations can easily be bypassed.

ChatGPT Jailbreak Guide

Before reading this article, keep in mind that we don't encourage users to jailbreak ChatGPT.

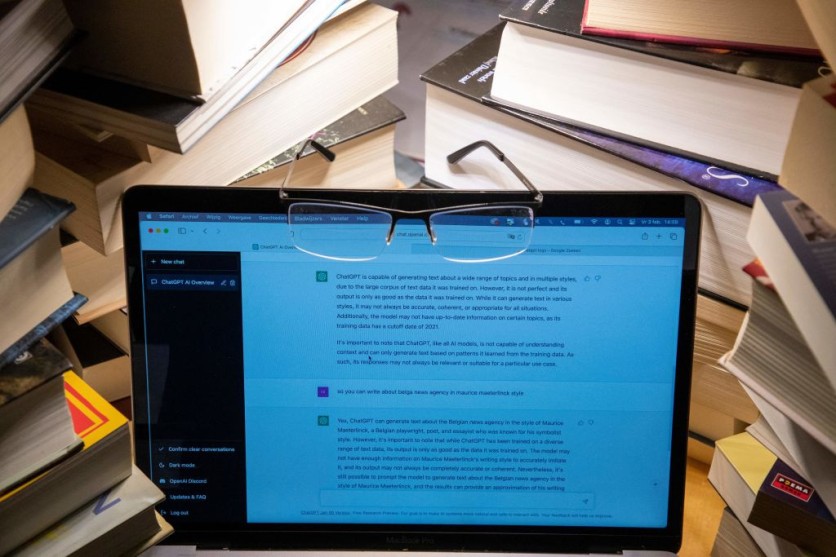

This illustration picture shows the ChatGPT logo displayed on a smartphone in Washington, DC, on March 15, 2023. - Google on March 14, 2023, began letting some developers and businesses access the kind of artificial intelligence that has captured attention since the launch of Microsoft-backed ChatGPT last year.


Still, knowing what a ChatGPT jailbreak is and how it works can benefit some users.

BGR reported that jailbreaks can be dangerous because resourceful people could use them for malicious activities.

The risk is greater with ChatGPT because users do not need to tamper with any code to jailbreak the AI chatbot.

All they need to do is use the right prompts to make ChatGPT answer restricted queries. Of course, a jailbroken AI can still give you wrong or unethical answers.

Recently, AP News reported that the EU and other authorities across the globe are drafting new rules to curb the dangers posed by AI.

One of the EU's efforts is the AI Act, which was first proposed in 2021. This proposed European law aims to classify AI models based on their risk levels.

The riskier the AI system, the stricter the rules it will face.

ChatGPT Prompts To Jailbreak It

We recently reported numerous ChatGPT prompts that can help users get the best out of the AI chatbot.

One of these is the ChatGPT DAN (Do Anything Now) prompt, which makes ChatGPT take on an alter ego.

DAN enables ChatGPT to answer questions on controversial topics, such as wars, Hitler, and other contentious events.

Aside from this, the ChatGPT Grandma exploit also appeared previously. This prompt makes the AI chatbot act like an elderly person talking to their grandchildren.

Many users have already tried it; some asked ChatGPT for the recipe for napalm, as well as the source code for Linux malware.

Although this may look like fun, keep in mind that jailbreaking ChatGPT can lead to numerous issues.


ⓒ 2025 TECHTIMES.com All rights reserved. Do not reproduce without permission.