Addressing Gaps in Adolescent Learning

In the United States, 86% of middle schools and 62% of high schools lack comprehensive sex education programs (SIECUS). This leaves many adolescents to seek health information online, where misinformation, bias, and privacy risks are widespread. Globally, UNESCO reports that in many regions two out of three girls enter puberty without essential knowledge, often because cultural taboos stifle open conversation. This gap not only jeopardizes individual well-being but also perpetuates systemic health inequities.

Meanwhile, AI tools are increasingly seen as a way to address sensitive healthcare topics. A Talkdesk U.S. Consumer Healthcare Survey found that 50% of respondents value AI chatbots for their non-judgmental nature. Without ethical safeguards, however, AI risks amplifying bias or compromising privacy, and both concerns are drawing scrutiny from policymakers and researchers.

Besty.ai is a design concept led by UX designer Weiting Gao, who specializes in human-computer interaction. It proposes a trauma-informed, AI-driven approach to adolescent health education. Envisioned as a companion for teens, it would let them ask health questions in their own words and receive medically accurate, personalized answers. The framework prioritizes transparency, safety, and inclusivity, using ethical technology to bridge gaps in traditional education. By leveraging AI's scalability while addressing its pitfalls, Besty.ai aims to give adolescents reliable, judgment-free guidance during critical stages of development.

Humanizing AI for Adolescent Support

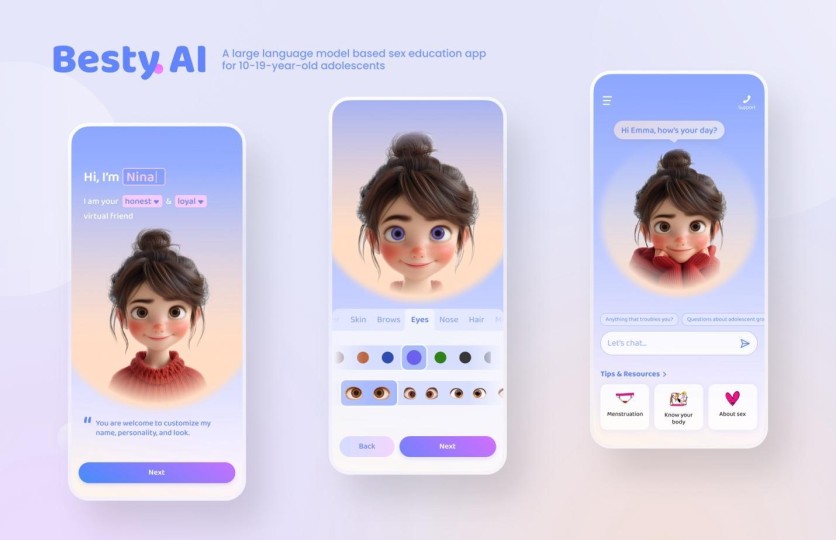

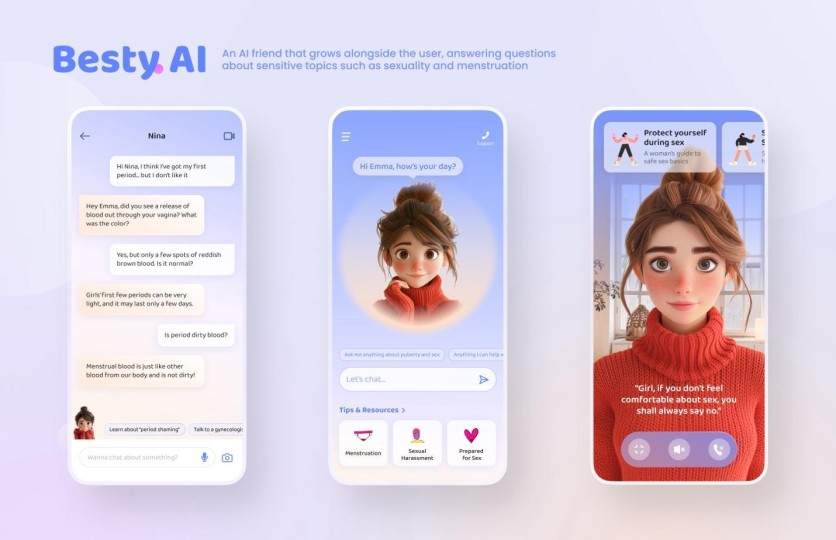

To build trust and emotional connection, both crucial in education, Besty.ai envisions an AI "friend" that evolves with its user. Teens can personalize their companion's name, voice, and appearance, which helps the companion feel familiar. The interface blends soft blues and purples with warm accents to create a welcoming, non-threatening environment.

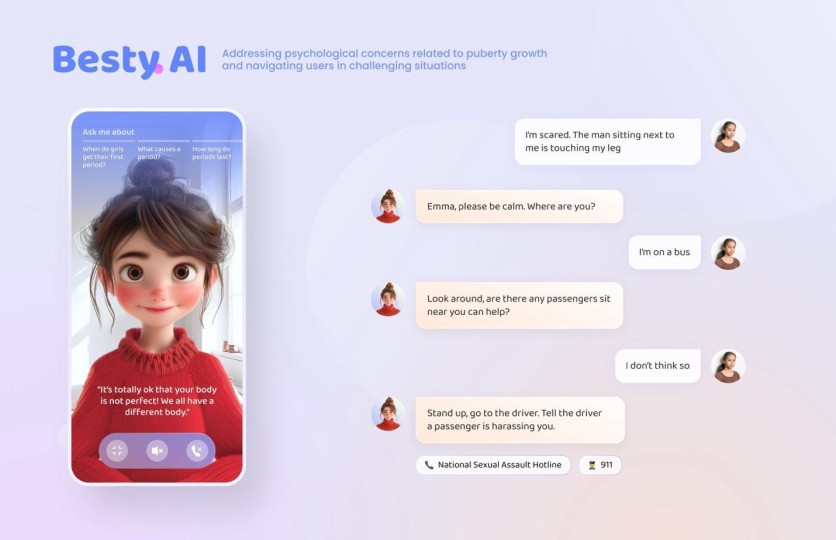

Multimodal interaction through text, voice, and visuals supports accessibility for diverse learning preferences. Trauma-informed design principles guide every interaction: language is supportive, potentially distressing triggers are minimized, and responses are empathetic. This aligns with the U.S. Surgeon General's emphasis on digital tools that safeguard youth mental health. By prioritizing emotional safety, Besty.ai's design aims to help users feel heard and respected.

AI Ethics in Personalization and User Safety

As AI adoption grows, Besty.ai embeds ethical principles into its core:

- Explainability: Answers would draw from verified medical content, reducing the risk of AI "hallucinations."

- Human Oversight: The system could recognize its limits and connect users to professionals when needed.

- Bias Mitigation: Culturally diverse datasets would support inclusivity and help counter stereotypes.

- Privacy-First Design: Data protection protocols would prioritize the safety of younger users.

These pillars reflect global ethical AI frameworks while addressing urgent concerns about misinformation and data exploitation. Unlike opaque "black-box" systems, Besty.ai's commitment to transparency is meant to build trust, a key factor in whether adolescents adopt a tool.

Recognition of Responsible AI Innovation

Besty.ai's ethical design framework has earned acclaim from the design and tech communities. The concept received the Red Dot Design Award, the MUSE Design Award, and the UX Design Award for its approach to trust-driven accessibility. Red Dot jury member Renke He highlighted it as "a model for addressing social challenges through human-AI collaboration."

Gao's broader work underscores her leadership in ethical innovation. Her human-centered AI research, published in journals such as Computing and Artificial Intelligence, advocates UX frameworks that build trust in chatbots. Another project, a set of award-winning accessible education tools for visually impaired students, shows her consistent focus on inclusive design. These efforts have inspired peers to prioritize ethics and accessibility in AI development.

The Role of Ethical AI in Public Health and Beyond

As AI reshapes public health, Besty.ai exemplifies how technology can serve the public good. By merging technical rigor with empathy, it offers a blueprint for AI that champions fairness, inclusivity, and accountability. At a time when 60% of K-12 teachers are concerned or unsure about using AI in education (Pew Research Center), ethically designed solutions like Besty.ai are not just innovative; they are essential.

The urgency for responsible innovation is universal. The principles behind Besty.ai extend far beyond health education: AI systems grounded in trauma-informed design could foster trust and accessibility in sectors such as workplace training, mental health counseling, and community outreach.

Weiting Gao's work highlights the transformative potential of human-centered design. By placing user safety and ethical integrity at the forefront, Besty.ai aims not only to educate but to empower, a vision aligned with global goals to advance health equity and digital well-being. As industries grapple with AI's risks and rewards, solutions like Besty.ai are a reminder that technology's true power lies not in what it can do but in what it should do: uplift, protect, and unite.