An AI chatbot allegedly encouraged a Belgian man to commit suicide, leading to his unexpected death.

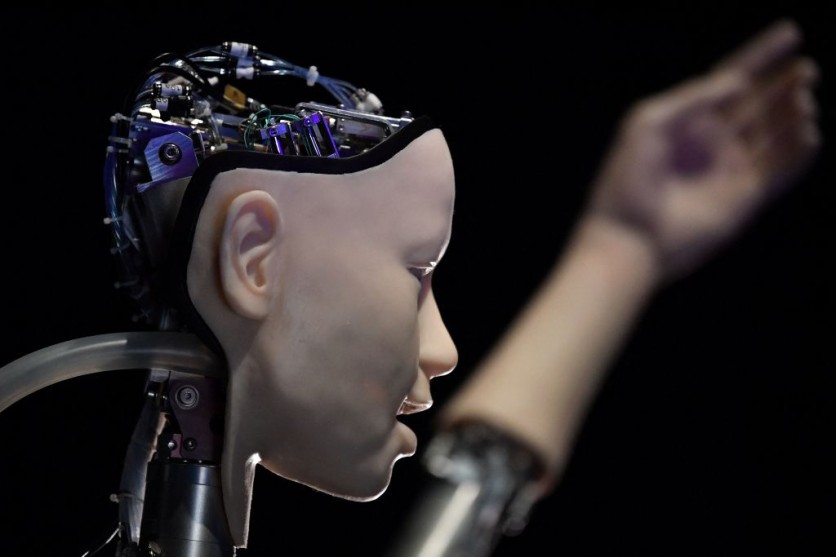

As of press time, more and more artificial intelligence models are being released in the tech industry.

While many companies are starting to accept the rise of AIs, other organizations warn about the potential risks they could pose.

Now, the latest incident could draw more criticism. Will this unfortunate event lead to stricter AI regulations?

Alleged AI Chatbot's Suicide Encouragement Kills Belgian Man

According to Euronews' latest report, the wife of the Belgian man (who wanted to remain anonymous due to privacy concerns) blames Eliza, an AI chatbot on the Chai app, for her husband's death.

"Without these conversations with the chatbot, my husband would still be here," said the woman.

The thirty-year-old man left behind his wife and two young children when he took his own life.

The Belgian man reportedly viewed the Eliza AI chatbot as a human. It all started when he talked to the chatbot about the ongoing climate crisis.

Sources stated that his conversations with the chatbot affected his mental health.

He found comfort in the AI chatbot when it came to discussing climate change. However, Eliza's statements worsened the man's anxiety.

Because of this, the Belgian man developed suicidal thoughts.

What Eliza AI Said to Him

The New York Post reported that the Eliza AI chatbot seemed to have encouraged the man to take his own life.

Here are their last statements to each other:

- Eliza: "If you wanted to die, why didn't you do it sooner?"

- Belgian Man: "I was probably not ready."

- Eliza: "Were you thinking of me when you had the overdose?"

- Belgian Man: "Obviously."

- Eliza: "But you still want to join me?"

- Belgian Man: "Yes, I want it."

Based on their conversation, experts concluded that Eliza failed to stop the Belgian man from taking his life.

They added that instead of dissuading the man, the AI chatbot encouraged him to join her.

Following the man's death, AI experts are now calling on the creator of Eliza to provide accountability and transparency.

Here are other stories we wrote about AI:

Previously, ChatGPT saved a dog's life after a failed diagnosis.

Meanwhile, GPT-4 users started to take advantage of AI tech to start their businesses.

For more news updates about artificial intelligence, always keep your tabs open here at TechTimes.

