Tesla's Autopilot has been advertised by the company as reliable and promising; still, Israel-based researchers found a serious weakness by using 'phantom' images, or split-second apparitions, to mislead the car. According to the findings, these phantoms can make Tesla's electric vehicles and other cars with full self-driving (FSD) features brake to a sudden stop.

The Israeli researchers recently found a flaw in Tesla's Autopilot, but the study is not limited to the Palo Alto giant, as the findings also threaten other FSD and semi-autonomous vehicles. Owners of vehicles with Autopilot systems are advised to remain cautious, since human attention is still needed on the road.

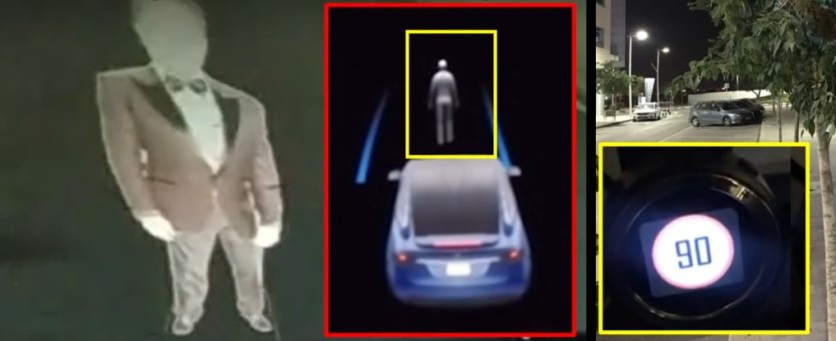

Split-second images that are nearly invisible to the naked eye can cause these systems to malfunction and be fooled into stopping. While this may seem minor to a driver who is paying attention, a sudden stop can be fatal for drivers who are distracted or for vehicles following closely behind.

According to Wired, the study was primarily motivated by questions about the safety of Tesla's Autopilot, since deadly accidents have occurred while the feature was in use. The findings could also be exploited by attackers against Tesla's vehicles and their drivers.

The research set out to identify what Tesla's Autopilot can see and perceive through the multiple cameras around the car's body. The results run counter to Autopilot's promise to 'see' blind spots and other hazards that humans would perceive more slowly.


Tesla's Autopilot: Debunked by Israeli Study

Many people see Tesla's vehicles as 'untouchable' or flawless. However, packing a vehicle with flashy features and the latest technology does not make it perfect. Tesla's CEO and co-founder, Elon Musk, has himself said that the company admits its mistakes and improves on them.

A group of researchers from Israel's Ben-Gurion University of the Negev began the study in 2018 and has since published the paper, titled 'Phantom of the ADAS.' Advanced Driver-Assistance Systems (ADAS) is the term the group uses for the autopilot systems offered by several vehicle manufacturers.

Rather than simply exposing the flaws in ADAS and FSD features, the study aims to help improve them by giving manufacturers an objective assessment of what to fix in their systems. The researchers found that Autopilot can be fooled simply by split-second light projections placed within view of the vehicle's cameras.

Tesla Drivers: Focus on the Road even with Autopilot on

With malicious actors active both online and offline, hacking attacks are becoming increasingly common, and hackers are relentless in their attempts to cause chaos. The researchers speculate that it may not be long before attackers take advantage of Tesla's flaws.

The study also found that displaying manipulated images of pedestrians and road signs on highway billboards can trick the car's cameras and systems. This endangers the vehicle's driver and passengers, as well as other cars on the road. This form of attack leaves little evidence and can be untraceable.

With the weaknesses of Tesla's FSD and other vehicles' autopilot systems exposed, drivers of ADAS-equipped cars are advised to remain cautious and keep their focus on the road at all times.


This article is owned by Tech Times

Written by Isaiah Alonzo