Transferring large amounts of data quickly and efficiently is a common challenge in enterprise data management. Samrat Aich, with nearly twenty years of IT experience, has addressed this issue by developing a method to enhance data transfer speeds and establish new standards in enterprise storage solutions.

Overcoming Limitations of Legacy Storage Systems

Legacy storage systems have long posed challenges for enterprise IT departments. Slow data transfer rates, inefficient resource use, and difficulty scaling with growing data volumes have affected productivity and increased costs. Maintaining these systems can also be costly and labor-intensive.

"Finding a way to move large amounts of data quickly and efficiently has been a priority," says Aich. The new solution aims to streamline operations and ease the burden on IT departments.

Aich's method pairs standard data migration tools with proxies (WAN accelerators) deployed at both the source and destination data centers, a departure from traditional practice. This approach has led to faster data transfers, particularly over wide area networks (WANs), where latency and limited bandwidth are often the bottleneck. The proxies help optimize the transfer process, so data moves more smoothly and quickly between distant locations.

In one project, Aich's team achieved notable transfer rates between data centers in Texas and California, surpassing their expectations. This success shows the new method's effectiveness and potential to improve data management practices. With faster, more efficient data transfers using regular data migration tools and additional proxies at the source data centers, enterprises can more easily migrate data, back up critical information, and maintain operations during large-scale transfers.

Optimizing Bandwidth and Reducing Latency

This method optimizes data replication between two different file storage platforms separated by a long distance without adding bandwidth, which can be difficult to increase. Instead of expanding bandwidth, the approach makes better use of the existing capacity.

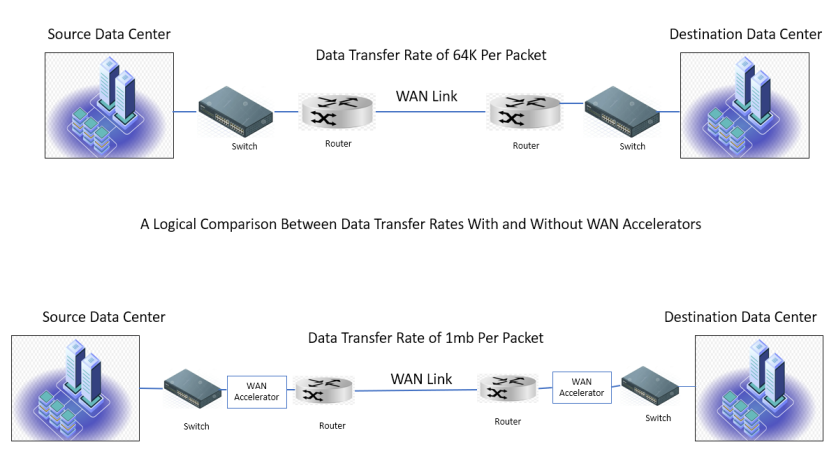

In cross-site replication over a WAN, data is transferred in 64K packets. Each packet must be successfully transferred and acknowledged before the next one is sent. For large data sets, these acknowledgment waits add up to significant latency and delay the overall transfer. The accompanying diagram illustrates data transfer rates between the source and destination with and without WAN optimization.
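To see why acknowledging each 64K packet before sending the next one limits throughput, the following is a minimal sketch of the arithmetic. The link speed and round-trip times are illustrative assumptions, not figures from the test described in this article, and `stop_and_wait_throughput` is a hypothetical helper name.

```python
def stop_and_wait_throughput(block_bytes: int, rtt_s: float, link_bps: float) -> float:
    """Approximate bytes per second when every block waits for its acknowledgment."""
    # Time to put one block on the wire at the link's raw speed.
    send_time_s = block_bytes * 8 / link_bps
    # One block is delivered per (send time + round trip).
    return block_bytes / (send_time_s + rtt_s)


BLOCK_BYTES = 64 * 1024   # 64K packet, as described in the article
LINK_BPS = 1e9            # assumed 1 Gbit/s WAN link (illustrative)

for rtt_ms in (1, 20, 40, 80):  # assumed round-trip times (illustrative)
    rate = stop_and_wait_throughput(BLOCK_BYTES, rtt_ms / 1000, LINK_BPS)
    print(f"RTT {rtt_ms:>2} ms: about {rate / 1e6:.2f} MB/s")
```

Under these assumptions, the round-trip time dominates long before the link's raw bandwidth does, which is why sending fewer or smaller packets across the WAN matters more than adding capacity.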

To address latency, delays, and losses, WAN accelerators can be deployed at both locations, as shown in the second diagram. These accelerators, installed at both ends next to the router and switch, compress data at the source. By reducing the amount of data on the wire, this compression can effectively double the transfer speed and cut the round trips needed, saving space, bandwidth, and transmission time.

Modern replication tools consist of clusters with clients and a server. Clients handle the heavy lifting, while the server manages workload distribution for data replication between different storage vendors. By setting up additional clients as proxies behind WAN accelerators at both the source and destination, speed can be increased and errors reduced. Some modern tools come with built-in WAN accelerators, which, if configured and enabled, can perform efficiently even without a physical hardware WAN accelerator. However, for optimal performance, replication tool clusters should be deployed across both the source and destination rather than just at the target.

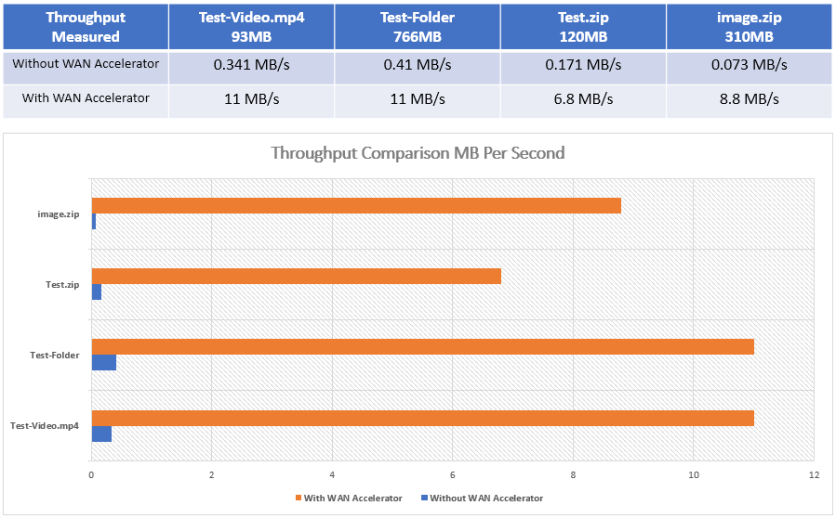

A test was conducted to compare data replication from a source to a target separated by a long distance. The test used replication tool clients, or proxies, deployed behind the WAN accelerator at the source, together with some clients at the target, all functioning as a single cluster.

Data is compressed only on the WAN path and decompressed automatically upon receipt at the target. This makes the network more efficient by saving space for other packets and reducing the total time taken for large data transfers over the WAN.
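The compress-on-the-WAN-path idea can be sketched in a few lines. This is only an illustration under stated assumptions: the article does not say which compression algorithm the accelerators use, so Python's standard `zlib` stands in for it, and the function names and sample payloads are hypothetical.

```python
import os
import zlib


def send_over_wan(payload: bytes) -> bytes:
    """Source side: compress before the data crosses the WAN (zlib is an assumption)."""
    return zlib.compress(payload, 6)


def receive_from_wan(wire_bytes: bytes) -> bytes:
    """Target side: restore the original bytes on receipt."""
    return zlib.decompress(wire_bytes)


# Highly repetitive data, standing in for text-like files.
text_like = b"quarterly report, revision 7\n" * 40_000
# Random bytes, standing in for data that is already compressed (video, zip).
random_like = os.urandom(len(text_like))

for name, payload in (("text-like", text_like), ("random-like", random_like)):
    wire = send_over_wan(payload)
    assert receive_from_wan(wire) == payload  # the target sees identical data
    print(f"{name}: {len(payload)} bytes in, {len(wire)} bytes on the WAN")
```

The two payloads behave very differently: repetitive data shrinks dramatically, while data that is already compressed barely changes, which is one reason gains depend on the type of data being replicated.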

The test validated this methodology with two scenarios:

- The replication tool cluster at the target location copied data from the source data center.

- The replication tool cluster at the target data center included additional clients or proxies deployed at the source data center.

The tests were performed on the following data types:

- A video file (.mp4) with a size of 93 MB.

- A folder containing system files with a size of 766 MB.

- A 120 MB zip file created from office files.

- A 310 MB zip file created from a collection of images.

Boosting Data Transfer Efficiency

In these tests, WAN optimization significantly increased throughput.

Overall, this method offers a practical solution to the challenges of achieving higher data transfer rates between multi-vendor storage systems during data migration over a WAN. Improved transfer speeds enhance productivity, reduce costs, and better prepare businesses for future growth.

The test results validated this methodology and led to the adoption of WAN accelerators to speed up replication between distant locations connected over a WAN. However, results may vary with the type of data, the distance between the two data centers, and the available bandwidth.

Cloud Integration: The Key to Flexible Enterprise Storage

Hybrid cloud strategies require integrating on-premises and cloud storage. "The future of enterprise storage lies in hybrid solutions," Aich says. "Cloud storage and smart data management can help organizations handle increasing unstructured data. Smart data management improves the overall data lifecycle, easing the management of sprawling data in the long run for enterprises."

Aich's method speeds up data migration to the cloud and improves data organization. This helps companies reduce storage costs by moving less frequently used data to cheaper cloud storage under a retention policy, after which the archived data is disposed of, while keeping important data accessible on-premises.
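A tiering rule of this kind can be expressed as a small decision function. The sketch below is hypothetical: the thresholds, the `FileRecord` type, and the action names are assumptions chosen for illustration, not details of Aich's implementation.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

ARCHIVE_AFTER = timedelta(days=180)   # assumed: untouched this long means "less frequently used"
RETENTION = timedelta(days=7 * 365)   # assumed: retention period for archived data


@dataclass
class FileRecord:
    path: str
    last_accessed: date
    archived_on: Optional[date] = None  # None while the file still lives on-premises


def decide(record: FileRecord, today: date) -> str:
    """Return the action the policy would take for one file."""
    if record.archived_on is not None:
        # Already in the cloud tier: dispose once the retention period has passed.
        if today - record.archived_on >= RETENTION:
            return "dispose"
        return "keep_in_cloud"
    if today - record.last_accessed >= ARCHIVE_AFTER:
        return "archive_to_cloud"
    return "keep_on_prem"


today = date(2026, 1, 1)
print(decide(FileRecord("/projects/active.docx", date(2025, 12, 20)), today))
print(decide(FileRecord("/projects/old.docx", date(2024, 3, 1)), today))
```

In practice the thresholds would come from an organization's own access patterns and compliance requirements rather than fixed constants.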

AI-Powered Operations in Storage Management

Aich integrates artificial intelligence for IT operations (AIOps) into his approach. Using AI and machine learning algorithms, his solution predicts storage needs, automates routine tasks, and identifies potential issues before they impact operations. "AIOps is not just about automation," Aich says. "It's about creating a storage system that learns and adapts to an organization's data patterns and requirements to operate at its optimum."
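As a rough illustration of what predicting storage needs can mean, the sketch below fits a straight line to daily usage samples and estimates when capacity would run out. It is a deliberately simple stand-in: the article does not describe the models Aich's solution uses, and the function names and sample figures are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def fit_line(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares slope and intercept for values sampled on days 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    slope = num / den
    return slope, mean_y - slope * mean_x


def days_until_full(usage_tb: Sequence[float], capacity_tb: float) -> Optional[float]:
    """Days from the latest sample until the trend line reaches capacity, or None if usage is not growing."""
    slope, intercept = fit_line(usage_tb)
    if slope <= 0:
        return None
    day_full = (capacity_tb - intercept) / slope
    return max(0.0, day_full - (len(usage_tb) - 1))


daily_usage_tb = [410.0, 412.5, 414.0, 417.5, 419.0, 422.0]  # made-up sample data
print(days_until_full(daily_usage_tb, capacity_tb=500.0))
```

A production system would account for seasonality, bursts, and uncertainty, but even a trend line like this shows how usage history can turn into an early warning.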

This AI-driven method has improved storage efficiency and reliability. Organizations using Aich's solutions have experienced less downtime, better resource allocation, and enhanced data protection.

Cost-Effective Strategies for Storage Transformation

Samrat Aich's approach to enterprise storage solutions addresses the ongoing challenges IT departments face. He updates legacy systems, integrates cloud storage, and uses AI to develop methods that improve efficiency and reduce costs, helping organizations prepare for future growth.

As demands on data management grow, Aich's contributions provide a helpful framework for businesses. "Our goal is to eliminate bottlenecks in data transfer and create a seamless storage experience," Aich says. His work continues to drive advancements in the field, offering organizations the tools they need to thrive.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.