Artificial intelligence is becoming an increasingly significant asset for companies worldwide, especially as they integrate generative AI features like chatbots into their services. However, deploying the large language models (LLMs) that power these features can be a complicated task.

AI engineer Michael Feil is taking aim at this challenge with his latest project—an open-source project called Infinity. This application programming interface (API) enhances the integration of embedding and reranking models (a subset of large language models built to retrieve, rank, and filter information) for organizations with limited infrastructure.

Learn more about Feil and how Infinity is helping to expand the capabilities and accessibility of artificial intelligence.

The Role of Large Language Models

80% of global companies are working with generative AI to deploy tools like chatbots and virtual assistants. These systems rely on LLMs, which are computational models that have been exhaustively trained on human text—to the point that the model can recognize and interpret real-world language.

LLMs run on a set of neural networks that consist of an encoder and/or decoder, which extract meanings from text and interpret the relationships between words and phrases. They also rely on self-attention mathematics, which essentially improves how the model detects how elements relate to each other. Some LLMs (like LLama-3) use only a decoder, while some (like BERT) use only an encoder. LLMs like BART and T5 use both an encoder and a decoder.

The tools created with this technology have made a significant impact on the world, with examples like OpenAI's ChatGPT seeing exponential growth after publicly launching in November 2022. However, the notorious problems that come with these tools are widely known. Issues like inaccurate data, unsourced information, and even what the industry calls "hallucinations" are widely recognized faults that make many think twice before using generative AI for real-world applications.

To this end, many AI tools have evolved to compound AI systems in order to leverage up-to-date information, make proper citations, and improve overall reliability. These systems are notable in that they include multiple calls to models, retrievers, or external tools—supplementing the LLM's work with additional resources that improve the model's results.

One of the most efficient examples of a compound AI system is retrieval augmented generation (RAG), which leverages vector embeddings (a numerical representation of data like text that retains semantic relationships and similarities). With RAG, the model retrieves relevant information from various sources and integrates it into the LLM's query input, allowing for more precise responses.

A key technology that improves upon this methodology is vector search. This technique essentially creates a mathematical map of data in vector space, allowing computers to analyze relationships in a dataset better, make predictions, and quickly and accurately find specific sets of information that are the most closely related to a query. It accomplishes this by ranking documents according to their relative distance in the vector, and if this distance calculation and document caching is performed in a specialized vector database, this compound AI system is substantially more feature-rich, adaptive, fast, and accurate than providing all possible information directly to the LLM.

However, RAG can be an intense computational process and requires a robust infrastructure capable of storing and running the vector database, decoder, encoder, and user interface. This infrastructure must be able to host multiple users concurrently, update new documents and their vector representations, keep track of all generated vector embeddings, and retrieve relevant data with minimal delay.

To this end, Michael Feil aims to streamline the deployment of encoder LLMs within compound AI Systems with Infinity.

Meet Michael Feil

After getting his bachelor's in Mechatronics and Information Technology and his master's in Robotics, Cognition, and Intelligence from TU Munich, Michael Feil landed his first job as a machine learning engineer at Rohde & Schwarz. It was there that he worked on LLM development, including leading projects for LLM-powered code generation for software development and building up the organization's infrastructure for running compound AI systems.

He performed this work right in the heat of the AI boom—his work coincided with the release of ChatGPT, a significant AI milestone that thrust LLM expertise into high industry demand. However, OpenAI had more capable models than open-source models, not to mention the robust infrastructure that composes these services. To close the gap, Feil worked on projects for HuggingFace and ServiceNow, providing inference code for their flagship model, StarCoder.

One notable example of Feil's early work was to integrate StarCoder into vLLM, an engine that leverages advanced memory management algorithms to expand the inference and memory allocation capacities of LLMs and allow them to process more user requests. Since its release, vLLM has become one of the most used engines across the industry, and it's currently being leveraged by major companies like AWS, Databricks, Google Cloud, and Anyscale for text-generation tools like chatbots.

Over this time and while working on various open-source projects, Feil became familiar with the inner workings of LLM inference (generating new data or predictions based on a model's learned patterns) and realized that there was no truly accessible system for encoder LLMs that allow inference custom-tailored for RAG.

Enter Michael Feil's Infinity.

Enhancing AI Infrastructure with Infinity

In October 2023, Feil released Infinity, a Python-based API designed to allow organizations with limited infrastructures to integrate vector embeddings and reranking LLMs into their compound AI Systems.

Infinity leverages a range of machine-learning techniques to speed up computation and allow more concurrent requests. For example, it uses dynamic batching, which collects and stores incoming embedding requests in a holding pattern while the encoder is at total capacity. It then organizes these requests based on their complexity (measured by aspects like prompt length) and allocates them into queued batches.

Infinity also uses a custom version of FlashAttention, an algorithm that combats the memory bottleneck caused by AI advancements that enhance computing power but not memory and data transference. With this modified version of FlashAttention, Infinity allows the encoding of multiple requests with variable length and at no overhead.

The result of these techniques is up to 22x higher throughput than baseline results.

Infinity can be easily integrated into a wide range of text embedding models—including all models from the Sentence Transformers library and those featured on the Multilingual Text Embedding Benchmark, which ranks the top-performing LLMs on the open-source market. It can orchestrate multiple models at once and aligns with OpenAI's API specifications, allowing engineers to easily integrate it into existing projects such as LangChain.

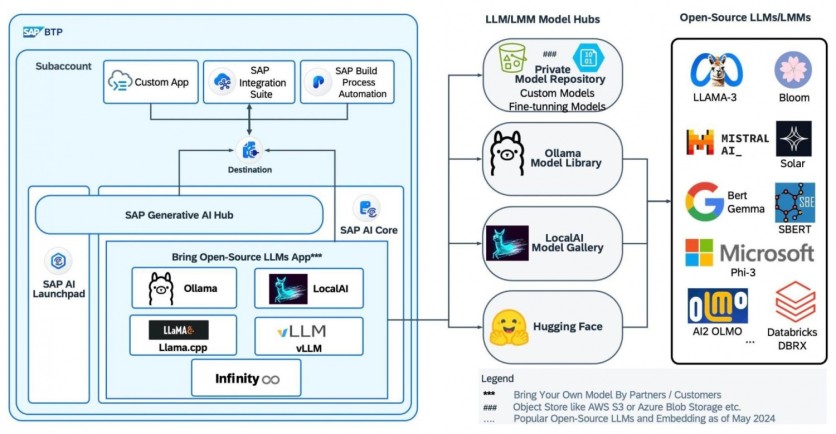

Feil published Infinity as an open-source project on GitHub, where it gained over 1,200 stars in nine months and over 80 forks. It was soon adopted by cloud-based tech companies such as SAP, Runpod, RAGFlow, and TrueFoundry. Thanks to its popularity, similar open-source projects (such as HuggingFace's Text Embeddings Inference and Qdrant's FastEmbed) were released soon afterward to continue developing this infrastructure.

Michael Feil's Ongoing Work

After releasing Infinity, Feil transitioned to working as an engineer at Gradient, a San Francisco tech company focused on AI automation. There, he continues to create open-source LLM models like Llama-3 8B Gradient Instruct 1048k, a model that integrates with Meta AI's recently released LLaMA-3 model and extends its context length capacity from 8,192 to 1,048,576 tokens. This project got over 150,000 downloads in just over three months, and it was the highest-trending open-source model on HuggingFace at the time of its release.

Feil has also been invited to discuss Infinity on mediums like the engineering podcast "AI Unleashed," Munich NLP's webinars, as well as webinars run by Run.ai, an AI optimization company that's now part of Nvidia. To this day, all of Feil's projects remain open source—free for any engineer to develop, extend, and deploy on their own project.

Infinity's ability to enhance one of the most critical components of generative AI is just one example of how Michael Feil is working to expand this rapidly expanding industry. Visit his open-source GitHub repository to explore more of his ongoing work.

![Apple Watch Series 10 [GPS 42mm]](https://d.techtimes.com/en/full/453899/apple-watch-series-10-gps-42mm.jpg?w=184&h=103&f=9fb3c2ea2db928c663d1d2eadbcb3e52)