Artificial intelligence startup Groq has landed $640 million in its latest funding round, further fueling its hopes of competing with Nvidia.

BlackRock reportedly led the round, with participation from Neuberger Berman, Type One Ventures, Cisco, KDDI, and Samsung Catalyst Fund.

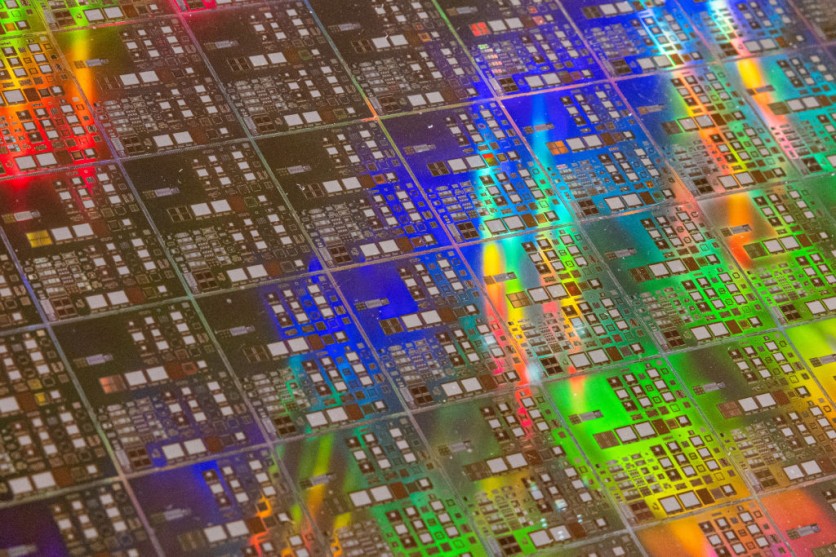

(Photo : Annabelle Chih/Getty Images)

Semron, a German startup founded by Dresden University alumni, pioneers 3D-scaled AI chips using memcapacitors, promising energy-efficient, cost-effective AI on mobile devices.

The round, which brings Groq's total funding to more than $1 billion and values the company at $2.8 billion, is seen as a big success. Initially, Groq aimed to raise $300 million at a slightly lower valuation of $2.5 billion. The new valuation is more than double Groq's previous valuation of approximately $1 billion in April 2021, when the company secured $300 million in funding from Tiger Global Management and D1 Capital Partners.

Groq announced today that Yann LeCun, Meta's chief AI scientist, will act as a technical consultant, while Stuart Pann, former head of Intel's foundry business and ex-CIO at HP, will become the startup's new COO. LeCun's appointment is somewhat surprising considering Meta's focus on its own AI processors, yet it provides Groq with a strong supporter in a competitive industry.

Groq Explainer

Groq, which, as per TechCrunch, came out of stealth in 2016, is developing an LPU (language processing unit) inference engine. The company states that its LPUs can run existing generative AI models similar to OpenAI's ChatGPT and GPT-4o 10 times faster while using only one-tenth of the energy.

Jonathan Ross, the CEO of Groq, is known for his contribution to the creation of the tensor processing unit (TPU), Google's specialized AI accelerator chip for training and running models. Ross and Douglas Wightman, an entrepreneur and former engineer at Alphabet's X moonshot lab, partnered up nearly ten years ago to establish Groq.

Groq offers GroqCloud, a developer platform powered by its LPUs, which offers "open" models such as Meta's Llama 3.1 family, Google's Gemma, OpenAI's Whisper, and Mistral's Mixtral. It also provides an API that lets customers use its chips in cloud instances.

Nvidia Competitors

Groq is one of numerous competitors attempting to outperform Nvidia. In a recent ISC conference presentation, Samsung inadvertently disclosed its intentions for a RISC-V CPU/AI accelerator. This accelerator could be designed to directly rival the H-series GPUs from Nvidia, the leading AI chip manufacturer.

The disclosure took place at a Unified Acceleration Foundation event titled "Unlocking the Next 35 Years of Software for HPC and AI," which featured a mention of a Samsung "RISC-V CPU/AI accelerator."

This has sparked speculation about the South Korean tech company's plans to integrate RISC-V architecture into its upcoming technologies, specifically the Mach-1 AI accelerator processor, scheduled for launch in the first half of 2025.

Samsung is part of the steering committee for the Unified Acceleration Foundation (UXL). In simpler terms, UXL develops software for AI accelerators that serves as an alternative to Nvidia's CUDA and does not rely on Nvidia's GPUs.

Samsung officially introduced its AI processor, Mach-1, on March 25. The company has also discussed in-memory processing extensively.

The AI chip is expected to enter production by the end of the year and be released in early 2025. The target market is expected to consist of edge applications that have low power consumption needs.
