Two weeks after Microsoft introduced 'Recall' as part of the Copilot+ PC experience on Windows, the feature faces heavy backlash over its privacy and security risks. In several cases, researchers have demonstrated that user data is not safe in Copilot's Recall, showing how easily they could extract data from the feature and put a user at risk.

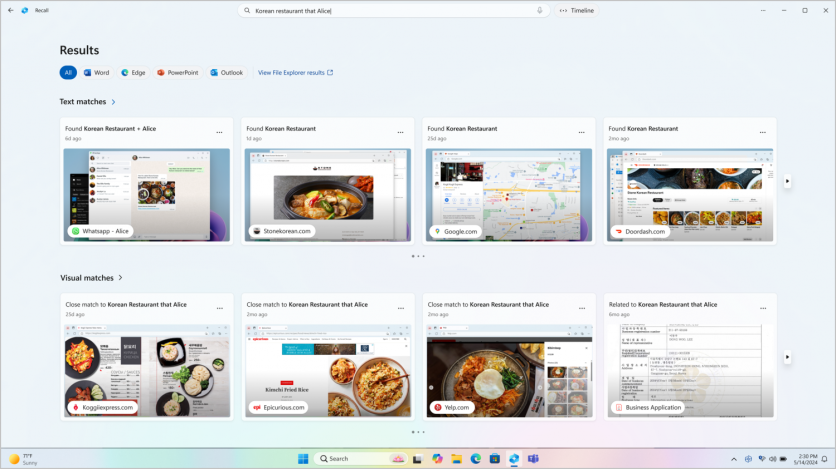

Microsoft designed Recall to help users remember what they have done on their computers; with a simple prompt to the AI, they can bring back earlier activity.

Microsoft Windows Recall Now Worries Security Experts

Many privacy experts and security researchers have raised concerns about Microsoft's Windows Recall, a feature introduced alongside Copilot+ towards the end of May. Although the feature has been public for only two weeks, many are already calling for its recall, and Microsoft faces heavy pressure not only from these experts but also from government agencies' probes.

A tool called Total Recall, recently shared on GitHub, shows how easily Recall's features can be abused to access users' sensitive data and information. It was created by a white-hat hacker, Alexander Hagenah, to expose the feature's vulnerabilities.

Copilot's Recall: Privacy, Security Risks Present

Other experts have also weighed in on this new Copilot+ feature. In a recent detailed analysis, security expert Kevin Beaumont claimed that Recall carries a massive risk that could effectively undermine the Copilot+ program. While Beaumont said that Recall could attract a niche user base with its promising experiences, he regarded the feature as poorly planned.

AI Data Access is a Massive Security Concern

Since AI arrived in the global technology landscape, it has raised alarms among experts and professionals who are wary of how these new experiences access data. A corporate AI race is under way in the industry, and decisions by major companies may hurt everyone in the process when they carry privacy and security risks.

OpenAI, Microsoft's prominent partner in AI development, is no stranger to inquiries about security concerns and risks, with various global watchdogs calling upon the company. The EU Data Protection Task Force recently probed ChatGPT's privacy compliance, but the chatbot was allowed to continue operating after the task force released its preliminary findings.

The digital age has seen increased attention to privacy and security, and AI's rapid growth has put the technology in the spotlight for these concerns. Recall on Windows, the latest from Microsoft's Copilot+, is now facing heavy scrutiny in the cybersecurity industry over possible data breaches and privacy risks, and Microsoft has yet to acknowledge the concerns raised.

