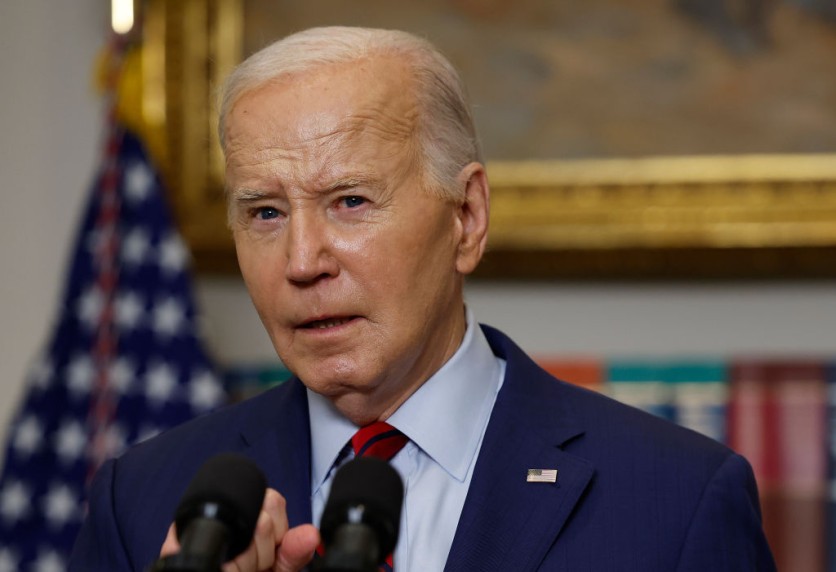

The US Department of Justice has cited artificial intelligence-generated deepfakes as its main concern in deciding to keep the audio of President Joe Biden's interview with Special Counsel Robert Hur from the public.

The Justice Department's arguments, made public in a court filing on Friday, respond to a legal challenge against Biden's use of executive privilege to withhold the audio from the public.

In Friday's filing, the DOJ acknowledged that enough audio of both Biden and Hur is already publicly available to produce AI deepfakes of them. However, it argued that releasing the original recording would make it harder to refute any falsified versions.

According to reports, the government argues that concerns about malicious tampering with audio recordings have only grown with time and with advances in artificial intelligence, deepfake, and audio technologies.

According to the DOJ, if the recording is made public, there is a good chance it will be improperly altered, and the doctored file could then be mistaken for the authentic recording and spread widely.

Conservative legal groups and House Republicans have been putting considerable pressure on the Biden administration to release the tape. The DOJ's transcript of the interview showed the president in several awkward moments.

DHS' Warning on AI

Concerns about AI deepfakes are becoming increasingly widespread across government agencies. Most recently, the Department of Homeland Security issued a warning in its latest federal bulletin.

According to an analysis that the Department of Homeland Security produced and shared with law enforcement partners across the country, foreign and domestic actors may use the technology to create serious obstacles during the 2024 election cycle.

Federal bulletins are occasional alerts sent to law enforcement partners about specific threats and concerns. This bulletin warns that AI capabilities could aid attempts to influence the 2024 US election cycle.

The bulletin says generative AI tools will likely give domestic and foreign threat actors more opportunities to interfere in the 2024 election cycle by amplifying emerging events, disrupting election processes, or targeting election infrastructure.

Arizona Against Deepfakes

Adrian Fontes, Arizona's Secretary of State, recently released a deepfake video of himself to warn about that very technology. The video alerts voters to the risks posed by AI-generated deepfakes and other fabricated political content.

Although the warning appears to come from Fontes, the figure delivering it is a deepfake of him, meant to underscore the message and show how convincing AI-generated content can look to an untrained eye.

The video's introduction, description, and disclaimer tell viewers that it is merely an "impersonation" of a real person. Despite the deepfake's odd facial expressions and robotic, stilted speech, the video passes fairly convincingly as real footage of the Arizona official.

Fontes said he planned to develop protocols for handling fabricated content and to ensure that election officials understand how artificial intelligence can be exploited to spread misleading information.
