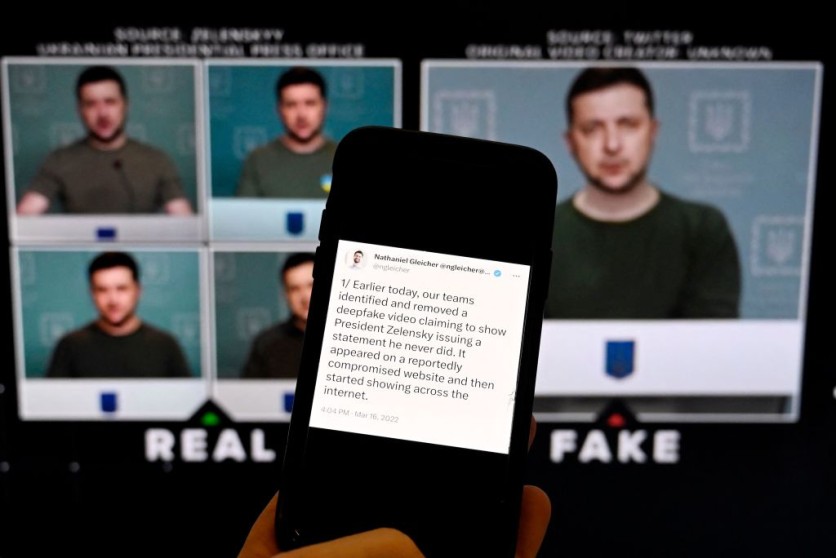

Artificial intelligence deepfakes remain a growing problem: terrorist groups are now reportedly using the booming technology to spread propaganda, according to experts.

An IS-claimed attack in Bamiyan province, Afghanistan, on May 17 left four people dead, including three Spanish tourists. Following the attack, the Islamic State Khorasan (ISKP) group, an IS offshoot operating in Afghanistan and Pakistan, created a video in which an AI-generated anchorman appeared to read the news.

The Khorasan Diary, a website devoted to news and analysis on the area, claims that the computerized avatar posing as an anchor spoke Pashto and had features similar to those of locals in Bamiyan.

In a separate AI-generated propaganda video that surfaced on Tuesday, a different digital male news anchor claimed that Islamic State was responsible for a car explosion in Kandahar, Afghanistan.

According to Abi Najem, a cybersecurity specialist based in Kuwait, the use of AI has made it easier for an organization like IS to produce videos that are on par with Hollywood productions.

IS began using AI-generated news bulletins four days after the March 22 attack on a Moscow music hall, which killed around 145 people and for which IS claimed responsibility. In that first video, IS used a "fake" AI-generated news anchor to describe the Moscow attack.

According to research by experts at the International Center for the Study of Violent Extremism, including Mona Thakkar, IS proponents have been exploiting text-to-speech AI technologies and character-generation techniques to translate news bulletins from IS's Amaq news agency.

AI Advantages in Propaganda

Even though IS's ability to project power was significantly diminished as a result of its territorial losses in Syria and Iraq, analysts note that IS supporters see artificial intelligence as a substitute for traditional means of advancing their radical beliefs.

Generative AI technology has reportedly changed how extremist groups conduct online influence operations in significant ways, according to researcher Daniel Siegel. One example is the use of AI-generated nasheeds, or Muslim religious songs, in recruitment efforts.

Early Terrorist Uses of AI

Terrorists have used AI before, as Tech Against Terrorism Executive Director Adam Hadley reportedly noted in November 2023. His organization sees about 5,000 examples of AI-generated content per week, some of which are connected to Hezbollah and Hamas and appear intended to sway public opinion about the Israel-Hamas conflict.

Another example that researchers at Tech Against Terrorism discovered at the time was a neo-Nazi messaging channel sharing AI-generated imagery, created by pasting racist and antisemitic prompts into an app available on the Google Play store.

Other examples include far-right figures creating a "guide to memetic warfare" that instructs others on how to use AI-generated image tools to create extremist memes, and the Islamic State publishing a tech support guide on how to use generative AI tools securely.

Additionally, a pro-Islamic State (IS) outlet published several posters featuring images most likely generated by generative AI platforms. The researchers also discovered a pro-IS user of an archiving service who claimed to have used an AI-based automatic speech recognition (ASR) system to transcribe Arabic language IS propaganda.

(Photo: Tech Times)

ⓒ 2025 TECHTIMES.com All rights reserved. Do not reproduce without permission.