Google has recently upgraded several of its accessibility apps, making them more user-friendly for individuals with low vision or blindness.

These improvements include a new version of the Lookout app, which now offers enhanced text reading capabilities, and updates to the Look to Speak app. Additionally, Google has expanded accessibility features in Maps, benefiting users who require specific accommodations.

Revamped Lookout App: A Game-Changer for Low-Vision Users

The new version of Google's Lookout app is a game-changing advancement for people with low vision or blindness. The app can read text and lengthy documents aloud, identify food labels, recognize currency, and describe images and objects seen through the camera.

The latest update introduces a "Find" mode, which helps users locate specific items by choosing from seven categories, including seating, tables, vehicles, utensils, and bathrooms.

How Lookout's Find Mode Works

When a user selects a category, the app identifies related objects as the camera scans the environment, providing directions and distances to these objects. This feature enhances users' ability to interact with their surroundings.

What's more, Google has introduced an in-app capture button, allowing users to take photos and receive AI-generated descriptions instantly.

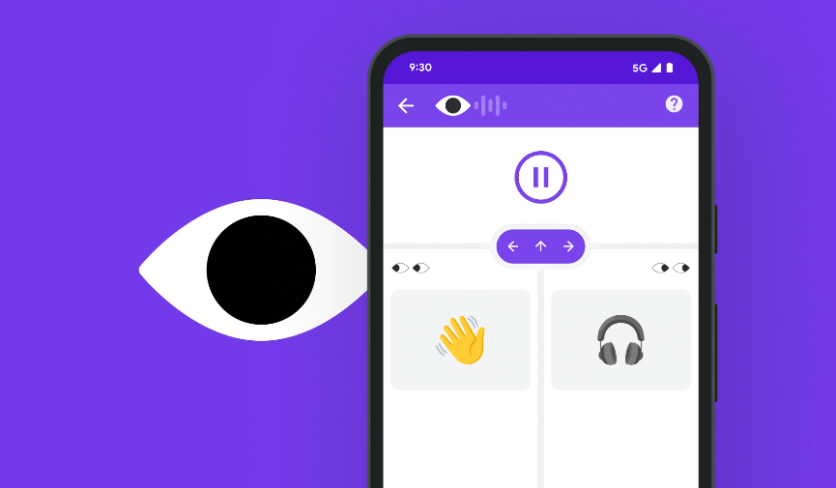

Look to Speak: Enhanced Communication Through Eye Gestures

According to Gizmodo, Google's Look to Speak app, which enables communication through eye gestures, has also been updated.

A new text-free mode lets users trigger speech by selecting from a photo book of emojis, symbols, and photos. Users can personalize the book by defining what each symbol or image represents, which makes communication more intuitive and effective.

Google Lens in Maps: Improved Navigation and Information

Google has expanded screen reader support for Lens in Maps. It can now announce the names and categories of places, such as ATMs and restaurants, along with how far away they are. Google has also enhanced detailed voice guidance, which offers audio prompts to direct users accurately.

Accessible Places in Google Maps: Now Available on Desktop

Four years after its launch on Android and iOS, Google's Accessible Places feature is now available on desktop. This feature helps users determine if a location can accommodate their accessibility needs, such as having an accessible entrance, washrooms, seating, and parking.

Currently, Google Maps offers accessibility information for over 50 million places. Moreover, users on Android and iOS can filter reviews to focus on wheelchair access, streamlining the search for accessible locations.

Project Gameface: Empowering Hands-Free Control

At the recent I/O developer conference, Google announced that it has open-sourced more code for the Project Gameface hands-free "mouse."

According to a separate report by CNET, the tool lets users control the cursor with head movements and facial gestures, making computers and phones easier to use for people with limited mobility.

This round of updates and new features across Google's accessibility apps shows that the search giant is prioritizing the needs of people with disabilities. The changes make everyday tasks easier for low-vision and blind users and promote greater independence and accessibility in many aspects of life.

ⓒ 2025 TECHTIMES.com All rights reserved. Do not reproduce without permission.