Microsoft and OpenAI have launched a $2 million fund to combat deepfakes and fraudulent AI content in response to the growing threat of AI-generated disinformation. The initiative aims to protect the integrity of democracies worldwide.

Concerns over AI-driven misinformation have escalated to a critical level as an unprecedented 2 billion people prepare to vote in 50 nations this year. Such misinformation particularly targets vulnerable groups.

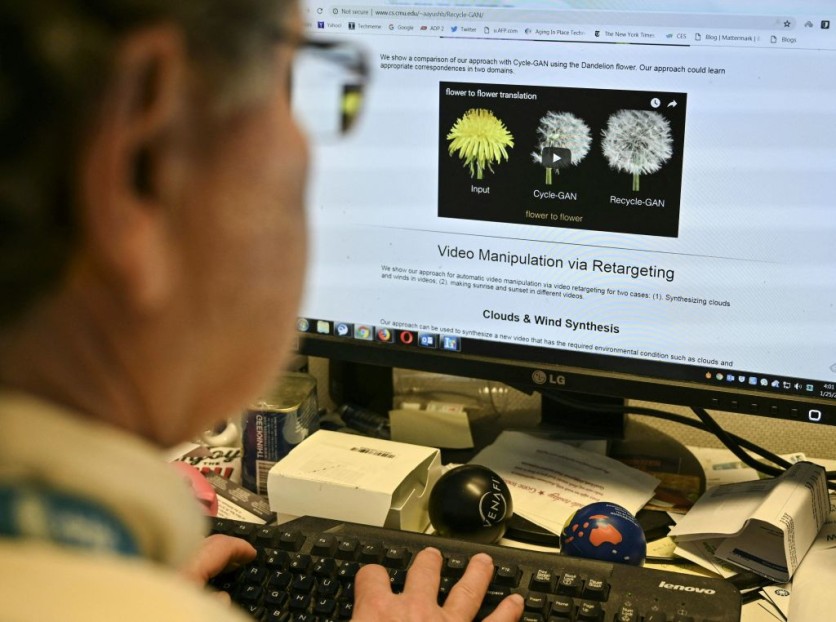

The rise of generative AI technologies, including chatbots like ChatGPT, has greatly expanded the tools available for creating deepfakes. These publicly available technologies can be used to fabricate videos, photos, and audio clips of political figures.

Major Tech Firms Collaborate to Fight Fake News, AI Disinformation

According to a report from TechCrunch, leading tech firms including Microsoft and OpenAI have made voluntary commitments to reduce the threat of AI-generated disinformation. They are also collaborating on a common framework for responding to deepfakes designed to deceive voters. Major AI organizations have begun addressing these risks within their own systems. Google has restricted its Gemini AI chatbot from answering election-related questions, and Meta, Facebook's parent company, has imposed a similar restriction.

OpenAI has released a deepfake detection tool to help academics identify fake material generated by its DALL-E image generator. The company has also joined the steering group of the Coalition for Content Provenance and Authenticity (C2PA), alongside Adobe, Google, Microsoft, and Intel, to fight disinformation.

The newly formed "societal resilience fund" is central to the campaign to promote ethical AI use. Microsoft and OpenAI will award grants from the fund to Older Adults Technology Services (OATS), C2PA, the International Institute for Democracy and Electoral Assistance (International IDEA), and Partnership on AI (PAI). The grants will support AI literacy and education programs, especially for disadvantaged populations.

Teresa Hutson, Microsoft Corporate Vice President for Technology and Corporate Responsibility, stressed the importance of the Societal Resilience Fund in supporting AI-related community projects. She also emphasized Microsoft and OpenAI's commitment to working with like-minded companies to combat AI disinformation.

Widely accessible AI technologies have raised worries about a surge in politically driven disinformation on social media, a scenario that was inconceivable until recently. Given entrenched ideological divisions and growing distrust of online content, AI may complicate this year's many election cycles.

Addressing AI-Generated Deepfakes Remains a Big Challenge for Authorities

While the latest artificial intelligence technologies are impressive in quality, most deepfakes, particularly those originating from Russia and China as part of global influence efforts, quickly lose credibility. According to Politico, experts noted that AI-generated content, no matter how convincing its photographs, videos, or audio clips, is unlikely to change most people's political views.

Generative AI was widely used in recent elections in Pakistan and Indonesia, but there is no evidence that it unfairly favored particular candidates. The sheer volume of content posted to social media every day makes it hard for AI-powered fakes, even lifelike ones, to gain momentum.

AI-generated misinformation operations have already affected the US, according to a report from CNN. In New Hampshire, robocalls using a fake Joe Biden voice tried to deter Democrats from voting in the primary. In January, fake AI-generated photographs of former US President Donald Trump with teenage girls aboard Jeffrey Epstein's plane circulated on social media. In February, a deepfake video posted to Twitter portrayed a leading Democratic candidate for Chicago mayor as unconcerned about police shootings.

Law enforcement agencies struggle to respond to deepfake threats, according to senior US officials. Foreign deepfakes may draw rapid public statements from authorities such as the FBI or the Department of Homeland Security, but when Americans are involved the response becomes more complicated, since officials fear appearing to influence elections or restrict free expression.

States have responded differently to AI deepfakes. California, Michigan, Minnesota, Texas, and Washington have passed election-deepfake laws. Minnesota's law prohibits spreading deepfakes intended to harm candidates within 90 days of an election. Michigan requires campaigns to disclose AI-manipulated media.

According to the nonprofit consumer advocacy group Public Citizen, many US states have bills pending to regulate deepfakes.

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.