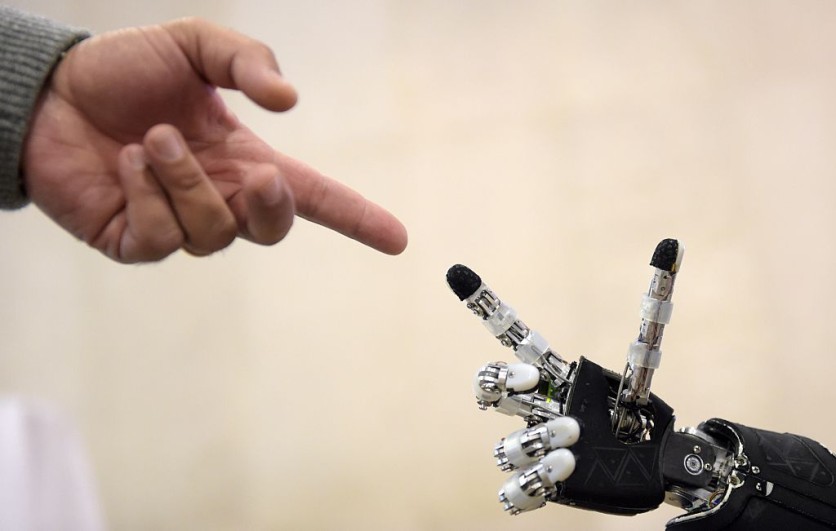

People living with arm paralysis may soon find their daily routines easier, thanks to a new technique from researchers that could let them control robotic arms through a brain-machine interface.

Robotic Arms Controlled Via Brain-Machine Interface

Researchers from Johns Hopkins University developed the new method, which enabled a man with partial arm paralysis to feed himself using bionic arms driven by a brain-machine interface.

As first reported by Engadget, the system works through specific prompts, such as choosing a "cut position": the user responds with slight fist motions, and the robotic arms carry out the command.

The man in the experiment simply made fist gestures to direct the arms to cut the food and deliver it to his mouth. According to the research team, he could eat dessert in just 90 seconds.

The researchers acknowledged that, despite progress in brain-machine interfaces and intelligent robotic systems for helping people living with sensorimotor deficits, these technologies have yet to address problems such as fine manipulation and bimanual coordination.

Hence, they sought to solve these problems by creating a "collaborative shared control strategy" to control and coordinate the two Modular Prosthetic Limbs (MPLs) needed for self-feeding.

"A human participant with microelectrode arrays in sensorimotor brain regions provided commands to both MPLs to perform the self-feeding task, which included bimanual cutting. Motor commands were decoded from bilateral neural signals to control up to two DOFs on each MPL at a time," the research team wrote in their paper.

In simpler terms, the new approach is based on a shared control scheme that reduces the mental effort needed to complete a task.

The Experiment's Findings

The man in the experiment was able to map his four degrees of freedom of movement (two for each hand) to as many as 12 degrees of freedom when directing the robot arms. The limbs' intelligent, prompt-based responses also reduced the effort required.
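To illustrate how a few decoded signals can drive many robot joints, here is a toy Python sketch of a shared control step. It is not the researchers' implementation. The names `Step` and `shared_control`, the choice of six degrees of freedom per arm, and all numeric values are assumptions made for illustration. The only detail taken from the paper's description is that the user controls at most two degrees of freedom on each limb at a time; in this sketch the robot's own plan supplies the rest.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: the 12 robot degrees of freedom are split evenly, 6 per arm.
DOFS_PER_ARM = 6
# From the paper's description: at most two DOFs per limb are user-controlled at a time.
MAX_USER_DOFS = 2


@dataclass
class Step:
    """One stage of a task for a single arm (hypothetical structure)."""
    user_dofs: tuple[int, ...]        # indices of the DOFs handed to the user
    auto_velocity: tuple[float, ...]  # robot-planned velocity for every DOF


def shared_control(step: Step, user_input: tuple[float, ...]) -> list[float]:
    """Merge decoded user input with the robot's autonomous plan."""
    if len(step.auto_velocity) != DOFS_PER_ARM:
        raise ValueError("auto_velocity must cover every DOF of the arm")
    if len(step.user_dofs) > MAX_USER_DOFS:
        raise ValueError("the user may control at most two DOFs per arm")
    if len(user_input) != len(step.user_dofs):
        raise ValueError("need exactly one input value per user-controlled DOF")

    # Start from the autonomous plan, then overwrite only the user's DOFs.
    command = list(step.auto_velocity)
    for dof, value in zip(step.user_dofs, user_input):
        command[dof] = value
    return command


# Example: the user steers DOFs 0 and 2 while the robot handles the other four.
cut_step = Step(user_dofs=(0, 2), auto_velocity=(0.0, 0.1, 0.0, 0.0, 0.2, 0.0))
print(shared_control(cut_step, (0.5, -0.3)))
# prints [0.5, 0.1, -0.3, 0.0, 0.2, 0.0]
```

In this sketch the user never has to think about more than two values per arm, which is the sense in which a shared scheme lowers the effort of a task that involves many joints.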

According to the study's findings, the participant effectively and consistently controlled the motions of both robotic limbs to cut and eat food in a challenging bimanual self-feeding task using neurally driven shared control.

The study further claims that demonstrating control of a bimanual robotic system through a brain-machine interface could have significant implications for restoring complex movement capabilities in people living with sensorimotor deficits.

However, Engadget noted that the technology is still in its infancy. The researchers are seeking to include tactile and visual feedback for the new technology. They also want to decrease the requirement for visual confirmation while increasing effectiveness and precision.

Still, the team believes that in the long run, robotic arms like these will help people with disabilities regain sophisticated movements and gain greater independence.

This article is owned by Tech Times

Written by Joaquin Victor Tacla

ⓒ 2026 TECHTIMES.com All rights reserved. Do not reproduce without permission.