On Thursday, April 8, Intel announced Bleep, a new AI-powered tool that the company hoped would reduce the amount of toxicity found in voice chat between gamers. According to Intel, the app "adopts artificial intelligence (AI) to detect and redact audio based on the user preferences." It acts as an additional layer of moderation on top of what a platform or service already provides.

What Does Bleep Do?

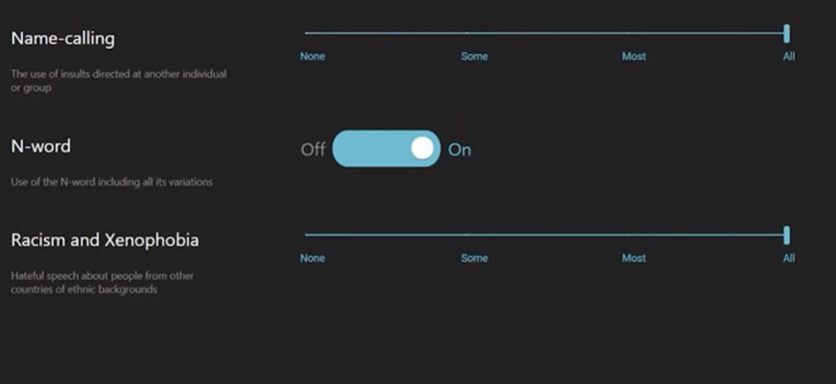

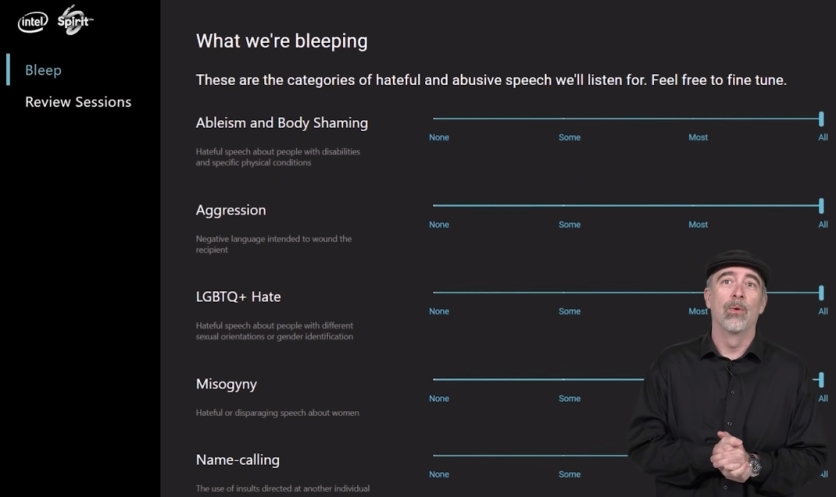

According to The Verge, Bleep lets users choose whether none, some, most, or all of the offensive language in a given category is filtered out. In Intel's interface, players can opt to leave a little bit of aggression or name-calling in their online gaming.

The program is designed to let users recognize and eliminate toxic speech in their voice chats, a "key step" toward reducing toxicity in online gaming, Intel vice president Roger Chandler said in mid-March during a virtual showcase for the program, Forbes reported.

The settings shown in the showcase are a first attempt at the product, and developers expect to make changes during beta testing. Some categories, for example, could be changed from a sliding scale to an on/off option.

Intel first revealed in 2019 that the Bleep technology was in development. It has since been developed with Spirit Artificial Intelligence, whose technology is already used to identify toxicity in games.


Why Was Bleep Made?

Bleep is designed to address the widespread problem of harassment on online gaming platforms. According to a 2020 study by the Anti-Defamation League, 81% of U.S. adults who play online multiplayer games have been harassed in some way.

The gaming industry has been challenged by the recent racial justice movement to address harassment and discrimination on its platforms. Twitch, for example, announced that it would change its harassment policy to take action against users who commit "severe misconduct," even when it occurs off the platform.

According to Forbes, Bleep aims to combat hatred and discrimination through artificial intelligence. Still, studies have shown that AI technology can reaffirm existing systemic biases such as racism and sexism.

A study published in April 2020 in the Proceedings of the National Academy of Sciences, for instance, found that multiple automated speech recognition programs "exhibited substantial racial disparities," with higher error rates for Black speakers than for white speakers.

This could be a substantial issue for Bleep, and its developers appear to be aware of the risk. They have stated that a diverse group of people is developing the AI, but they do not expect it to be perfect. The team has acknowledged that artificial intelligence can be problematic. However, the platform aims to give users as much control as possible to navigate such a "nuanced environment."


This article is owned by Tech Times

Written by Lionell Moore
